Confluence as CMS, AWS as Website Platform, ReactJS as Presentation

This post follows up on the previous post, Migrating My Personal Website to Confluence Cloud, Transformed by AWS. As mentioned there, that implementation had a few limitations:

- The website would not be 100% mobile friendly.

- The existing AWS implementation still cost me some money, mainly because of the AWS EC2 proxy approach.

The next step would be to address these limitations.

Confluence Cloud REST API

Confluence Cloud offers a range of useful APIs (https://developer.atlassian.com/cloud/confluence/rest/). Of particular interest to me were “Search Content by CQL” and “Get Content”. With these APIs, it would be possible to extract published content from Confluence itself and apply a much more customised presentation.

A two-step approach would be adopted:

- Search for all Confluence content to download via a specific CQL query (e.g. by labels, modified time, etc.)

- For each result item, make an additional call to download the detailed body and any other relevant metadata. This would minimise the initial network traffic from the search, especially if a future version of the search could compare the results against what had already been downloaded and pick up only changes in last updated date or labels.
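The two steps above could be sketched roughly as follows. This only builds the request URLs and makes no network calls. The paths /wiki/rest/api/content/search and /wiki/rest/api/content/{id} are taken from the Confluence Cloud REST API as I understand it; the site URL, the label and the date below are placeholders, and the exact set of expand values is an assumption to check against the API reference.

```javascript
// Sketch only: builds the URLs for the two-step download described above.

// Step 1: "Search Content by CQL". Combines optional label and
// last-modified filters into a single CQL expression.
function buildSearchUrl(baseUrl, { labels = [], modifiedSince, limit = 25 }) {
  const clauses = [];
  if (labels.length > 0) {
    const quoted = labels.map((label) => `"${label}"`).join(", ");
    clauses.push(`label in (${quoted})`);
  }
  if (modifiedSince) {
    clauses.push(`lastmodified >= "${modifiedSince}"`);
  }
  if (clauses.length === 0) {
    throw new Error("At least one filter is required to build a CQL query");
  }
  const url = new URL("/wiki/rest/api/content/search", baseUrl);
  url.searchParams.set("cql", clauses.join(" and "));
  url.searchParams.set("limit", String(limit));
  return url.toString();
}

// Step 2: "Get Content" for one search result, asking for the rendered
// body, the labels and the version (assumed expand values).
function buildContentUrl(baseUrl, id) {
  const url = new URL(`/wiki/rest/api/content/${encodeURIComponent(id)}`, baseUrl);
  url.searchParams.set("expand", "body.view,metadata.labels,version");
  return url.toString();
}

// Placeholder site and values, for illustration only.
const exampleSite = "https://example.atlassian.net";
console.log(buildSearchUrl(exampleSite, { labels: ["content_type_blog"], modifiedSince: "2019-01-01" }));
console.log(buildContentUrl(exampleSite, "12345"));
```

Keeping the search response small and fetching bodies one by one means a later version could skip the second call for any item whose last updated date has not changed.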

Confluence Content Structure

It would be worthwhile to explain the content strategy I had in mind. At the moment, I had identified three content types:

- Blog articles

- General pages like about me, privacy, resume, etc

- Small page snippets that are not core to the page content, but would be embedded into multiple pages

Confluence supports pages and blog posts. While it would be possible to build on these content types, there would still be no way to differentiate between embedded content and full-fledged pages.

A much more flexible approach was to use Confluence labels. There would be three pre-defined labels, each mapping to a type of content (content_type_blog, content_type_page, content_type_embed). This would also give me the flexibility to add new content types in the future.
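As a minimal sketch of the label approach, the extraction script could resolve the content type from a content item's labels like this. The three label names come from the text above; the short type names ("blog", "page", "embed") and the function name are made up for illustration.

```javascript
// Label-to-type mapping based on the three pre-defined labels.
// The type names on the right are illustrative, not from the original setup.
const CONTENT_TYPE_BY_LABEL = new Map([
  ["content_type_blog", "blog"],
  ["content_type_page", "page"],
  ["content_type_embed", "embed"],
]);

// Returns the content type for a list of Confluence label names,
// or null when none of the content-type labels is present.
function contentTypeFromLabels(labels) {
  for (const label of labels) {
    if (CONTENT_TYPE_BY_LABEL.has(label)) {
      return CONTENT_TYPE_BY_LABEL.get(label);
    }
  }
  return null;
}

console.log(contentTypeFromLabels(["aws", "content_type_blog"])); // "blog"
console.log(contentTypeFromLabels(["aws"])); // null
```

Adding a new content type later would then only need one more entry in the map and a matching template.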

Confluence Content Metadata

On top of content type, I also needed some mechanism to attach metadata to each piece of content. Confluence supports the excerpt and page properties macros out of the box, and it would be most logical to use these two.

I defined the following additional page properties:

- Path: This defines the friendly URL I want the content to be published under.

- Alternative paths: This defines any other alternative friendly URLs I would want to map to this content. This would be more for ‘backward compatibility’, in the sense that if I had shared the links of this content before under another URL, this could help perform URL redirection to the new URL.

- Published date: The date the content is published. This gave me more control compared to using the default date Confluence tracks.

- Updated date: This would be added when I had updated a published piece of content and wanted to reflect that on the website.

- Image: This image would be shown in Twitter, Open Graph and other embedded previews.
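To make the shape of this metadata concrete, here is a rough sketch of how the page properties might be normalised into one record per content item. It assumes the properties have already been parsed out of the page into plain strings keyed by property name, and that alternative paths are entered as a comma-separated list; both are assumptions of this example, as are the field names of the resulting object.

```javascript
// Turns raw page-properties values (plain strings keyed by property name)
// into a normalised metadata record. Field names are illustrative.
function toMetadata(props) {
  // Assumption: multiple alternative paths are separated by commas.
  const splitList = (value) =>
    (value || "")
      .split(",")
      .map((entry) => entry.trim())
      .filter((entry) => entry.length > 0);

  return {
    path: (props["Path"] || "").trim(),
    alternativePaths: splitList(props["Alternative paths"]),
    publishedDate: props["Published date"] || null,
    updatedDate: props["Updated date"] || null,
    image: props["Image"] || null,
  };
}

// Made-up example values.
console.log(
  toMetadata({
    "Path": "/writings/article1",
    "Alternative paths": "/old-post, /blog/article1",
    "Published date": "2019-01-15",
    "Image": "/images/article1.png",
  })
);
```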

Presentation Strategy

With the content type template defined, the next step would be to figure out how to style them. These would be the guidelines and principles I had established:

- Given a URL, all the necessary Open Graph and Twitter metadata should be loaded as part of the initial page load, to facilitate sharing the links on Twitter, Facebook or LinkedIn.

- Layout and content should be decoupled, such that a change in layout should not cause an update to all pages.

- The main page content should be part of the initial load. Any other peripheral content should be loaded asynchronously. This would include all embedded page content and any dynamic lists of related or recommended articles.

My choice of presentation technology was ReactJS, quite simply because I wanted to learn more about it. For the current iteration, only ReactJS in the browser would be explored and implemented.

My thoughts after implementing ReactJS were:

- I liked how ReactJS focused on the presentation of the data it receives, and the approach taken to promote reuse via React Components.

- However, at this point, I was not able to reuse ReactJS components across multiple JavaScript files unless I used an additional tool like webpack.

- Perhaps what I had been pursuing was HTML5 Web Components, but I would leave that for another future adventure.

To strike a balance between reusability and over-complicating this small personal website project, I decided to have each content type or component have its own CSS and JavaScript files. There would also be a set of common CSS and JavaScript files which all content would use.

At the moment, the following content type templates / pages were identified:

- Template for Blog Content Type

- Template for Page Content Type

- Template for Embedded Content Type (just pure raw content body)

- Default Landing Page

- Listing Page for all blog content

- Listing Page for blog content with specific label

Page Loading with AWS Technologies

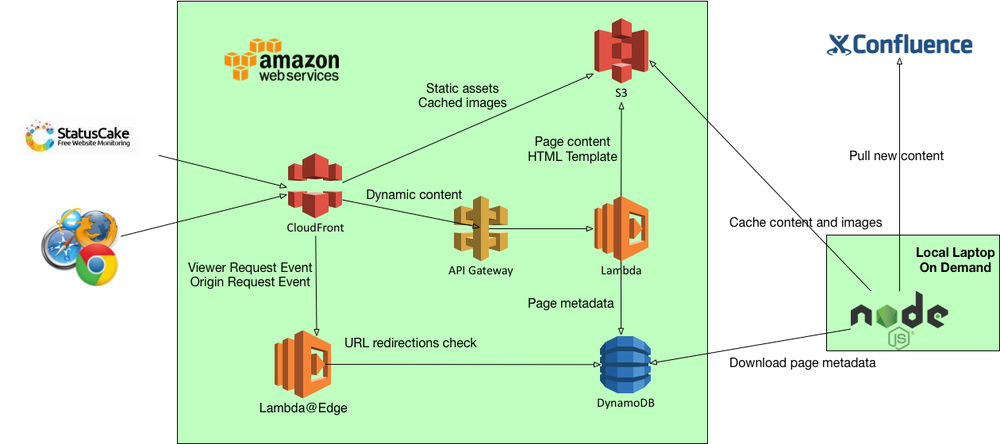

This would be a high-level flow of how a page of this website would be loaded. All other pages would follow a similar flow.

- The URL request would reach our configured CloudFront distribution.

- The CloudFront Viewer Request event would then trigger a Lambda function to check whether the URL matched any of the configured alternative paths. If it did, a 302 redirect would be sent back to the browser. The alternative paths would be stored in a DynamoDB table, which was built up by pulling and parsing the metadata from the Confluence pages.

- The CloudFront Origin Request Event would be triggered next, where a Lambda function could do URL rewriting, for example rewriting /writings/ to /writings/index.html.

- The CloudFront behaviour would then route the request to the backing origin. In some cases it would just be an S3 bucket of CSS, JS, HTML and image assets. In other cases it would be an API Gateway endpoint. The /writings/ and /writings/labels/ API Gateway endpoints would trigger a Lambda function, which simply loaded the corresponding index.html from the S3 bucket, e.g. /writings/index.html or /writings/labels/index.html.

- If the content was genuinely dynamic, for example /writings/article1, a Lambda function would be triggered that performed the following:

  - Load the metadata of the content from DynamoDB, based on the URL.

  - Load the content from the S3 bucket.

  - Load the HTML template file from the S3 bucket based on the content type.

  - Merge the content and its corresponding metadata into the template.

  - Return the merged content.

- After the page was loaded, other dynamic content could be triggered, for example embedding another piece of content or retrieving a list of featured posts.
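The two Lambda@Edge steps in the flow above (redirecting alternative paths on viewer request, then rewriting directory URLs on origin request) might look roughly like the sketch below. It relies on the standard CloudFront event shape (event.Records[0].cf.request) and the Lambda@Edge response format for redirects. The DynamoDB lookup is replaced by a hypothetical lookupCanonicalPath function so the example runs locally; the in-memory map, the fakeEvent helper and the paths are made up.

```javascript
// Viewer request: redirect known alternative paths to the real path.
// `lookupCanonicalPath` is a hypothetical async function; in the setup
// described above it would query the DynamoDB table of alternative paths.
function makeViewerRequestHandler(lookupCanonicalPath) {
  return async (event) => {
    const request = event.Records[0].cf.request;
    const target = await lookupCanonicalPath(request.uri);
    if (!target) {
      return request; // no match: let CloudFront continue with the request
    }
    return {
      status: "302",
      statusDescription: "Found",
      headers: { location: [{ key: "Location", value: target }] },
    };
  };
}

// Origin request: rewrite directory-style URLs such as /writings/
// to /writings/index.html before they reach the origin.
async function originRequestHandler(event) {
  const request = event.Records[0].cf.request;
  if (request.uri.endsWith("/")) {
    request.uri += "index.html";
  }
  return request;
}

// Local demonstration: an in-memory map stands in for DynamoDB.
const alternativePaths = new Map([["/old-post", "/writings/article1"]]);
const viewerRequestHandler = makeViewerRequestHandler(async (uri) => alternativePaths.get(uri));
const fakeEvent = (uri) => ({ Records: [{ cf: { request: { uri, method: "GET", headers: {} } } }] });

viewerRequestHandler(fakeEvent("/old-post")).then((result) => console.log(result));
originRequestHandler(fakeEvent("/writings/")).then((result) => console.log(result.uri));
```

In a real deployment each function would be exported as the handler of its own Lambda and attached to the matching CloudFront event type.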

In the final version, the Lambda function behind API Gateway merged the content with its template at request time. This had not been the original plan, which was to use S3 events and Lambda triggers to transform downloaded content into another bucket ahead of time, to improve website efficiency. The drawback was that any template change required re-generating all impacted pages. That proved to be too large an operational overhead, so the idea was dropped.

Note: in order for CloudFront to front an API Gateway, CORS must be implemented! API Gateway custom domains were not necessary.
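The merge step itself can be quite small. The sketch below is not the actual implementation; it assumes a hypothetical placeholder syntax where {{name}} inserts an HTML-escaped value (for titles and meta tags) and {{{name}}} inserts raw HTML (for the content body exported from Confluence). The template and the values are made up.

```javascript
// Escapes a value for safe use in HTML text and attribute values.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Fills a template in a single pass, so placeholders that happen to
// appear inside inserted content are not expanded again.
// {{{name}}} inserts raw HTML, {{name}} inserts escaped text.
function mergeTemplate(template, values) {
  const get = (key) => (values[key] == null ? "" : String(values[key]));
  return template.replace(
    /\{\{\{(\w+)\}\}\}|\{\{(\w+)\}\}/g,
    (match, rawKey, escapedKey) => (rawKey ? get(rawKey) : escapeHtml(get(escapedKey)))
  );
}

// Made-up template carrying the sharing metadata in the initial page load.
const exampleTemplate = [
  "<html><head>",
  "<title>{{title}}</title>",
  '<meta property="og:title" content="{{title}}">',
  '<meta property="og:image" content="{{image}}">',
  '<meta name="twitter:card" content="summary_large_image">',
  "</head><body>{{{body}}}</body></html>",
].join("\n");

console.log(
  mergeTemplate(exampleTemplate, {
    title: "Confluence & AWS",
    image: "https://example.com/images/article1.png",
    body: "<p>Hello</p>",
  })
);
```

Because the merge happens per request, a template change takes effect immediately without re-generating any stored pages, which is the trade-off described above.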

Error Page Rendering

I initially handled error page rendering within the Lambda functions, until I realised that CloudFront could associate HTTP error codes with a custom error page from my S3 bucket. This was a much cleaner way to manage error page rendering.

Extracting Confluence Content into AWS Storage

All content served was extracted from Confluence and cached into the following:

- DynamoDB: Mappings from alternative paths to the actual path, mappings from labels to content, and mappings from the actual path to the content itself.

- S3 buckets: Static website assets, plus the content and cached content images.

It was not trivial trying to use DynamoDB, especially for someone coming from a traditional SQL background. Figuring out queries, scans, indexes and projections took a while. Updating existing data was a pain as well, due to the asynchronous nature of the API. A single content update required me to delete data from three tables and then add it back, since DynamoDB did not give me the ability to do cascading deletes. I was definitely not using DynamoDB the right way.
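To illustrate why one content update touched three tables, here is a small sketch that derives the rows for each mapping from a single content record and works out which old rows have become stale. The table names, attribute names and the planUpdate helper are all hypothetical; the post only states that the three mappings exist. No DynamoDB calls are made.

```javascript
// Builds the rows that one content item contributes to each of the
// three mappings. Table and attribute names are hypothetical.
function buildWriteRequests(content) {
  const requests = [
    { table: "ContentByPath", item: { path: content.path, contentId: content.id, type: content.type } },
  ];
  for (const alternativePath of content.alternativePaths) {
    requests.push({ table: "AlternativePaths", item: { alternativePath, path: content.path } });
  }
  for (const label of content.labels) {
    requests.push({ table: "ContentByLabel", item: { label, path: content.path } });
  }
  return requests;
}

// Compares the rows of the previous and next versions of a content item.
// Rows that exist only in the previous version must be deleted by hand,
// because nothing cascades from one table to the others.
function planUpdate(previous, next) {
  const keyOf = (request) => JSON.stringify(request);
  const nextRequests = buildWriteRequests(next);
  const nextKeys = new Set(nextRequests.map(keyOf));
  const deletes = buildWriteRequests(previous).filter((request) => !nextKeys.has(keyOf(request)));
  return { deletes, puts: nextRequests };
}

// Made-up example: the "react" label is removed from an article.
const before = { id: "12345", type: "blog", path: "/writings/article1", alternativePaths: ["/old-post"], labels: ["aws", "react"] };
const after = { ...before, labels: ["aws"] };
console.log(planUpdate(before, after));
```

Computing the stale rows up front would at least limit the deletes to what actually changed, rather than deleting and re-adding everything.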

In addition, each new DynamoDB table or index and each Lambda function added some CloudWatch metrics and alarms, which quickly went beyond the free tier allowance. After going back to review the defaults, I made a conscious decision to simply eliminate all of them. They might be useful in real-world production operations, but not for a personal website.

In the current setup, the extraction runs via a Node.js script on my local laptop. In the future, this could be migrated to AWS Lambda + API Gateway + Cognito for a more online, or maybe even automated, content publishing experience.

Final Architecture

Putting all the pieces together, this was the final architecture.

The final architecture relied heavily on AWS free tiers, specifically:

- Always free 1 million Lambda calls per month

- Always free 25GB DynamoDB

- 12 months free 1 million API Gateway calls per month

- 12 months free 50GB CloudFront

- 12 months free 5GB S3

At the moment, the only AWS bill that came was 0.12 USD for AWS Lambda@Edge (there was no free tier for this!). Everything else was free. So, on top of the monthly Confluence Cloud subscription, my website cost did go down significantly. It could be lower still if I tried to host my own Confluence, either at home and publishing to AWS, or by spinning up a t2.micro EC2 instance and running Confluence only when I needed to.

Concluding Thoughts

The website was far from finished. There were additional features I would like to put in place, but for now I had explored whatever I could on AWS with this particular ‘project’. AWS offers an extremely low-cost option for hosting a website, and I would definitely be coming up with other projects to learn more AWS technologies. The focus would of course be on the free tiers, specifically:

- Amazon Cognito for secured user access

- Amazon SES and Amazon SNS to send notifications

- Amazon SQS and Amazon SWF for workflow

- AWS CodeBuild, CodeCommit and CodePipeline for CI/CD

But for now, it had been a fun learning experiment and experience.